Before the start of the EuroSTAR conference sessions, attendees had an opportunity to participate in a series of half-day tutorials on Tuesday morning. I took part in Michael Bolton’s tutorial, “What’s The Problem? Delivering Solid Problem Reports”. It’s a topic which is close to my heart; in fact, it’s a topic I’ll be covering when I present my first-ever workshop at the UK TestBash conference in 2015 – so I was very excited to pit my wits and ideas against Michael’s.

We were hands-on from the beginning, as we began exploring applications on our laptops and mobile devices, to find anything we could classify as a problem, and then produced a bug report on the problem that we found. We then exchanged these bug reports within the class, and checked to see whether our colleagues’ bug reports gave us enough information to reproduce the problem for ourselves.

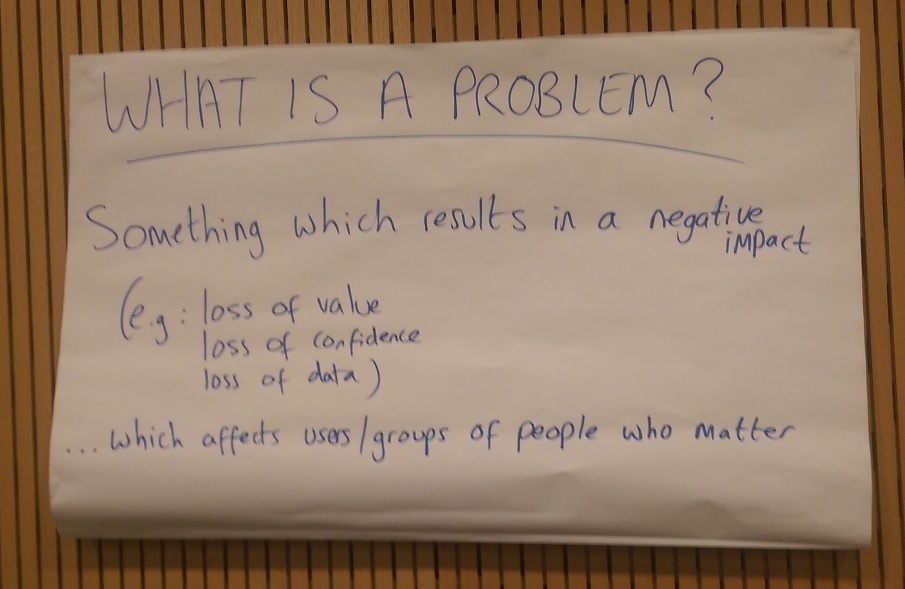

From this, we broke into groups and sketched our own ideas of what constitutes a problem. My group’s definition is embedded below. (Isn’t it funny how I ended up wielding the pen again? I’m going to get myself a reputation at this rate!)

As a class, we moved around the room and compared the definitions. We each touched on some similar points: problems are emotive, and describe the relationship between products and people (and how these relationships can break down). As Jerry Weinberg says, decisions about quality are inherently political and emotional. (As human beings, we like to appear rational, but this in itself is inherently irrational!)

We discussed a few of the classic bug reporting tropes, which survive mostly due to tradition rather than criticality. For example, we’ve all been taught about the importance of including an “Expected Result” and an “Actual Result” within our reports. But who are we (as testers) to say what is expected? We are rarely the end-users of our own product, so our expectations are relatively unimportant! And as testers aren’t the sole architects of the product, it’s not our role to state what that result should be. We learned to look more at desires than expectations.

In the tutorial, we focused instead on the use of heuristics: fallible methods of solving a problem or making a decision. We looked at the consistency heuristics from the Rapid Software Testing methodology, using the FEW HICCUPPS mnemonic as a guide to evaluating whether or not a particular issue is a problem.

Speaking of mnemonics, we also looked at a new mnemonic which Michael is currently trialling, as a rule of thumb for constructing problem reports: “PEOPLE WORKing” –

- Problem – a summary of what you’ve observed (relationship between perceived/desired)

- Example – illustration of the problem

- Oracle – what can you apply to the situation to tell whether it could be a problem

- Polite – use of language. (“Nobody likes to be told their baby is ugly!”)

- Literate – comprehensible, and effectively communicating the “story” of the problem and its “characters”

- Extrapolation – this one reveals an acronym within the mnemonic, as it is related to Cem Kaner’s RIMGEA mnemonic (http://theadventuresofaspacemonkey.blogspot.ie/2014/04/rimgea.html)

- Workaround – is there an alternative solution which lessens the severity of the problem
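
For anyone who likes to see a checklist as a template, here is a minimal sketch of how the points above might map onto a report structure. This is purely my own illustration and not something Michael presented: the `ProblemReport` class, its field names and the `render` method are all hypothetical, and the sample report is invented. I've assumed that Polite and Literate describe how the text is written rather than being sections of their own, so they don't appear as fields.

```python
from dataclasses import dataclass


@dataclass
class ProblemReport:
    """Hypothetical template loosely based on the PEOPLE WORKing points.

    The field names are assumptions made for this sketch. "Polite" and
    "Literate" are treated as qualities of the wording, not as fields.
    """

    problem: str             # summary: what was perceived vs. what was desired
    example: str             # a concrete illustration of the problem
    oracle: str              # what suggests that this could be a problem
    extrapolation: str = ""  # my reading: how far the problem might extend
    workaround: str = ""     # anything that lessens the severity

    def render(self) -> str:
        """Return the report as plain text, skipping any empty sections."""
        sections = [
            ("Problem", self.problem),
            ("Example", self.example),
            ("Oracle", self.oracle),
            ("Extrapolation", self.extrapolation),
            ("Workaround", self.workaround),
        ]
        return "\n".join(f"{title}: {text}" for title, text in sections if text)


# An invented example report, with no workaround known yet.
report = ProblemReport(
    problem="Saved notes lose their line breaks when reopened.",
    example="Type two lines, save, reopen: both lines appear on one line.",
    oracle="Inconsistent with how the same notes look before saving.",
    extrapolation="Also seen with pasted text, not only typed text.",
)
print(report.render())
```

Leaving `workaround` empty simply drops that section from the output, which felt closer to the spirit of the mnemonic than forcing a "None" entry into every report.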

We explored each of the above points in far more detail than I can explain here. I’d be happy to discuss this in more detail with you, and I hope that Michael will publish his own article on PEOPLE WORKing in the future.

There was some interesting discussion about effective note-taking (testing is a science, and you can’t be a good scientist if you don’t keep good notes) and how we can keep track of bugs, issues and risks as we test, so that we can deliver valuable information to our product owners.

As is usual for one of Michael’s classes, I also came away with an interesting reading list! Reading is a real passion of mine, and the breadth and depth of Michael’s literary background never cease to amaze me, and constantly feed my own Amazon wishlist. From this session, I’ve resolved to read:

- Billy Vaughn Koen, “Discussion of the Method” (2003)

- Antonio Damasio, “The Feeling of What Happens” (1999)

- Jerome Groopman, “How Doctors Think” (2007)

All in all, I can’t believe how much we squeezed into a half-day session, and it’s given me a whole host of extra avenues to explore under my own steam. It reinforced my own belief that expertise in bug reporting isn’t inherent, but that it can certainly be learned, and I learned some new ways in which I could tune my own problem reports.